Abstract

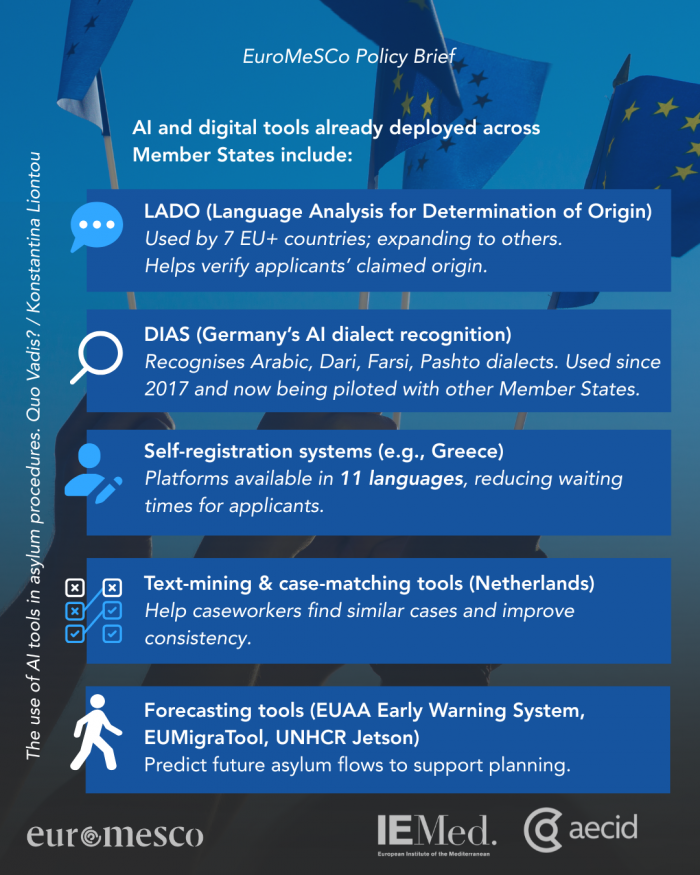

This Policy Brief examines how Artificial Intelligence (AI) tools are increasingly used in asylum procedures across EU Member States, from language and dialect recognition to self-registration platforms, tele-interviews, text-mining and forecasting tools. While these technologies can help overburdened systems process claims more efficiently, their use in such high-stakes contexts raises serious legal and ethical concerns.

The analysis shows that AI can affect credibility assessments, shift decision-making towards opaque, data-driven logics, and undermine core asylum principles such as individual assessment, the benefit of the doubt and the right to an effective remedy. Inaccurate or biased systems may contribute to wrongful rejections and even breaches of non-refoulement, particularly when language tools misrecognise dialects, training data embed discrimination, or applicants cannot understand or challenge automated outputs.

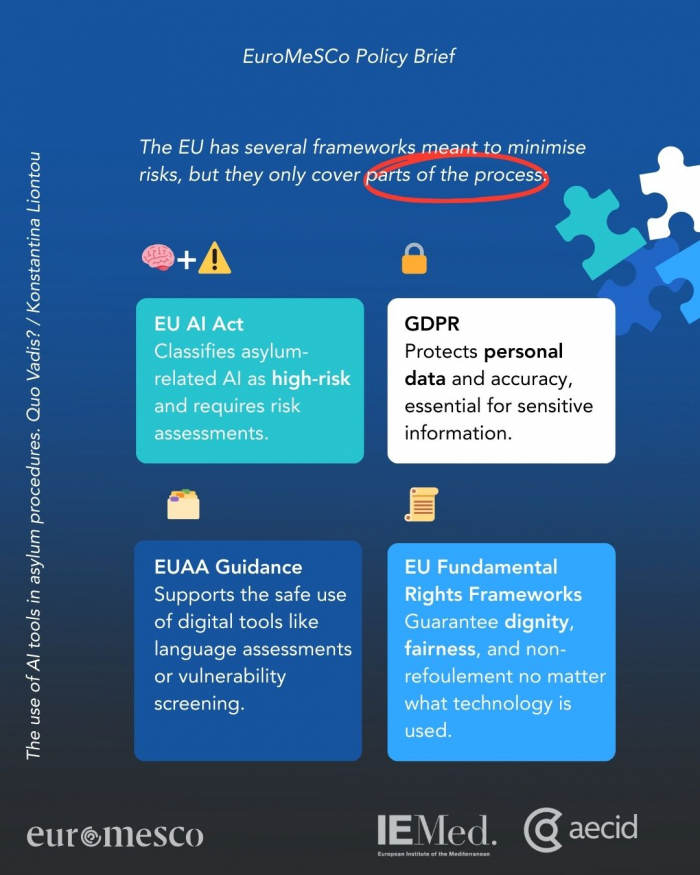

Although the EU AI Act classifies asylum-related AI systems as “high-risk”, important loopholes remain around transparency, public registries, and human oversight. Combined with uneven deployment across Member States and intrusive data practices, these loopholes create a governance gap in one of the most sensitive areas of public decision-making.

The Brief calls for a rights-based, precautionary approach to AI in asylum procedures, ensuring that digital innovation does not come at the expense of fairness, dignity, or protection.

It recommends:

• Grounding all asylum-related AI tools in clear human rights and asylum law safeguards

• Prohibiting AI systems that undermine non-refoulement, individual assessment or effective remedy

• Requiring independent fundamental rights impact assessments and robust human oversight for high-risk tools

• Ensuring transparency, explainability and judicial review of AI-influenced evidence and decisions

• Strengthening data protection, accuracy and security, particularly for highly sensitive personal data

• Investing in training for caseworkers, asylum officers and NGOs to recognise algorithmic bias and correctly weigh AI outputs